All published articles of this journal are available on ScienceDirect.

A Healthcare System Employing Lightweight CNN for Disease Prediction with Artificial Intelligence

Abstract

Introduction/Background

This research introduces the EO-optimized Lightweight Automatic Modulation Classification Network (EO-LWAMCNet) model, employing AI and sensor data for forecasting chronic illnesses within the Internet of Things framework. A transformative tool in remote healthcare monitoring, it exemplifies AI's potential to revolutionize patient experiences and outcomes.

This study presents a healthcare system that integrates a Lightweight Convolutional Neural Network (CNN) for rapid, AI-based disease prediction. By exploiting the efficiency of a lightweight CNN, the model holds promise for improving early diagnosis and enhancing overall patient care.

Materials and Methods

The Lightweight Convolutional Neural Network (CNN) is implemented to analyze sensor data in real-time within an Internet of Things (IoT) framework. The methodology also involves the integration of the EO-LWAMCNet model into a cloud-based IoT ecosystem, demonstrating its potential for reshaping remote healthcare monitoring and expanding access to high-quality care beyond conventional medical settings.

Results

Utilizing the Chronic Liver Disease (CLD) and Brain Disease (BD) datasets, the algorithm achieved remarkable accuracy rates of 94.8% and 95%, respectively, showcasing the robustness of the model as a reliable clinical tool.

Discussion

These outcomes affirm the model's reliability as a robust clinical tool, particularly crucial for diseases benefiting from early detection. The potential transformative impact on healthcare is emphasized through the model's integration into a cloud-based IoT ecosystem, suggesting a paradigm shift in remote healthcare monitoring beyond traditional medical confines.

Conclusion

Our proposed model presents a cutting-edge solution with remarkable accuracy in forecasting chronic illnesses. The potential revolutionization of remote healthcare through the model's integration into a cloud-based IoT ecosystem underscores its innovative impact on enhancing patient experiences and healthcare outcomes.

1. INTRODUCTION

In the modern global health arena, chronic diseases, notably those affecting the brain and liver, have become primary challenges confronting nations worldwide. The World Health Organization (WHO) underscores the growing prevalence of chronic illnesses, noting that they now overshadow infections and other traditional health concerns [1, 2]. Brain diseases span a spectrum from neurodegenerative conditions like Alzheimer's to brain tumors, and liver diseases range from cirrhosis to hepatitis. These ailments profoundly impact individuals, their families, and societies, contributing significantly to global morbidity and mortality, with millions affected by their severe consequences each year. The complexity of predicting chronic diseases such as those affecting the brain and liver lies in the multitude of influencing factors, from genetic predispositions to environmental exposures [3, 4]. Early detection is crucial for managing and potentially mitigating these diseases, yet it remains challenging because early symptoms are subtle and often misleading. For example, initial signs of liver disease might present as mere fatigue or slight abdominal discomfort, easily confused with less serious conditions. Similarly, early indicators of brain diseases might be mistakenly attributed to normal aging. The advent of the Internet of Things (IoT), a transformative technological innovation, promises to redefine healthcare delivery. IoT encompasses a vast network of interconnected devices that collect, transmit, and analyze data without human intervention, facilitating a new era of medical diagnostics and patient care [5, 6].

In our research, Convolutional Neural Networks (CNNs) play a pivotal role. CNNs have significantly advanced medical diagnostics, particularly the prediction and management of chronic diseases such as brain and liver disorders. We use them for their feature extraction and classification capabilities, which are essential for interpreting complex medical data and for identifying patterns that are beyond the reach of conventional methods [7]. The networks are specifically engineered to be lightweight and efficient for healthcare settings, focusing on Chronic Liver Disease (CLD) and Brain Disease (BD). By learning hierarchical data representations automatically, CNNs enhance the accuracy of disease predictions, aiding early detection and potentially improving outcomes.

CNNs excel in identifying intricate patterns within large datasets that traditional analytical methods might overlook. This capability stems from their deep learning architecture, which consists of multiple layers of processing units loosely modeled on the human brain's neural structure. By processing data through these layers, CNNs can automatically learn and refine how they recognize patterns, making them exceptionally effective for medical imaging and predictive analytics. In the context of chronic diseases, where early detection plays a crucial role in successful management, CNNs offer a transformative advantage. They can detect subtle abnormalities in medical data, such as MRI scans or liver function test results, that might indicate the early stages of disease before clinical symptoms become apparent. This early detection is vital for conditions like liver cirrhosis or neurodegenerative diseases, where timely intervention can significantly alter the disease's trajectory and patient outcomes. Moreover, the study leverages lightweight CNN models that are optimized for healthcare applications. These models are designed to be computationally efficient, so they can be deployed in real-world healthcare settings without extensive hardware, making advanced diagnostics more accessible and timely. This aspect of CNN technology not only enhances diagnostic accuracy but also supports the healthcare sector's shift towards more proactive and preventive care strategies [8, 9].

This study bridges the gap between the pressing need for accurate disease prediction and the robust capabilities of IoT. By enabling real-time monitoring and data analysis, IoT devices provide healthcare professionals with invaluable insights into patient health, supporting more informed diagnoses and treatment strategies. This approach not only elevates the quality of care but also marks a shift from reactive to proactive healthcare, underscoring the transformative potential of technology in addressing chronic diseases. The continuous exploration of these technologies in global health research highlights their critical role in addressing the complexities of chronic disease management and the ongoing need for innovative solutions.

2. RELATED WORK

A segue into the role of technology in predicting diseases leads us to the vast and continually evolving realm of the Internet of Things (IoT) in healthcare. According to the research paper [10], the term “IoT” is attributed to the individual who coined it, representing the interconnectedness of devices and systems communicating over the internet. In recent years, healthcare has become a focal point for IoT applications. A study [11] provided an exhaustive review of how IoT devices, such as wearable sensors and embedded systems, offer an unprecedented opportunity to monitor patients in real-time, enabling timely interventions. These devices provide continuous streams of data, facilitating the proactive identification of health anomalies. But while IoT offers the mechanism for real-time data collection, the crux lies in deciphering this data to derive meaningful insights. This necessitates advanced computational methodologies, which is where Convolutional Neural Networks (CNNs) make an entrance. CNNs are a category of deep learning models, particularly adept at processing and analyzing visual imagery. A study [12] provided groundbreaking work on ImageNet and demonstrated the unparalleled capabilities of CNNs in image classification tasks. While traditionally associated with image processing, CNNs have recently been adopted in healthcare for processing medical images, predicting diseases, and even analyzing data from IoT devices [13].

The interface of IoT and CNNs in healthcare technology is particularly promising. A study [14] illustrated a model where data from wearable IoT devices was processed using CNNs to predict cardiac arrhythmias. Their approach showcased how real-time monitoring can be synergized with deep learning techniques to produce actionable insights for medical professionals. Another compelling work [15] delved into how a combination of real-time monitoring from IoT devices and the analytical prowess of CNNs could predict the early onset of neurodegenerative diseases, bridging the often fatal gap between early symptom manifestation and accurate disease diagnosis. However, while the promise is vast, challenges abound. Integrating IoT into healthcare is not without its hurdles. Data privacy, device interoperability, and the sheer volume of data generated pose significant challenges [16]. Furthermore, while CNNs have shown potential, ensuring their predictions are interpretable to healthcare professionals remains a work in progress [17]. Delving further into the intersection of healthcare and technology, we encounter numerous studies emphasizing the transformative potential of the Internet of Things (IoT). A study [18] elucidated the profound impact of IoT on personalized healthcare. Their research focused on ambient assisted living, wherein embedded devices facilitate the continuous monitoring of individuals, especially the elderly, ensuring timely interventions in case of health emergencies. This concept broadens the spectrum of healthcare, transitioning from hospital-centric to patient-centric models, ensuring care even outside traditional healthcare establishments. Additionally, the omnipresence of wearable devices and their incorporation into the health ecosystem can't be ignored. Another study [19] explored the rise of wearable technology, emphasizing the empowerment of patients through self-monitoring. 
Their study asserted that such devices have drastically improved patient engagement, especially in the realm of chronic diseases, where continuous monitoring can lead to better disease management and improved outcomes. As the debate around data collection via IoT intensifies, the conversation naturally gravitates towards its effective utilization. Disease prediction, in this context, is of paramount importance. A work [20] delved into this very facet, exploring the predictive analytics of data collected from various IoT sources. They posited that with the right algorithms, it's possible to not only predict the onset of diseases but also anticipate disease progression and potential complications, allowing for a comprehensive care plan. Given the vast influx of data from IoT devices, the role of advanced computational techniques becomes pivotal. The prominence of Convolutional Neural Networks (CNNs) in healthcare analytics has gained traction over recent years. In a detailed exploration, another study [21] showcased the adaptability of CNNs in medical imaging, detailing their applications in areas like tumor detection, organ segmentation, and disease classification. Their findings resonate with the broader academic consensus that CNNs, given their deep learning architecture, are uniquely poised to revolutionize medical diagnostics [22].

The primary objective of this paper is to introduce and evaluate the EO-LWAMCNet model, a cutting-edge AI tool integrated within an Internet of Things (IoT) framework. Designed to process real-time physiological sensor data, the model aims to accurately predict chronic conditions, particularly Chronic Liver Disease and Brain Disease, enhancing early detection and intervention in healthcare settings.

3. THE INTERNET OF THINGS (IoT) IN HEALTHCARE

Over the last ten years, the digital revolution has transformed numerous industries, including healthcare, and the Internet of Things (IoT) is driving much of this change. IoT offers unprecedented possibilities for collecting, processing, and exploiting data. The term “IoT” refers to a wide range of internet-connected products, from home appliances to office equipment, and it has had a substantial impact on the healthcare sector. Traditional healthcare models are reactive in nature: patients typically seek treatment only once symptoms emerge. The growth of IoT has prompted a paradigm shift toward a more proactive strategy. Connected devices with embedded sensors can continuously monitor a variety of physiological indicators, enabling early identification and even prognosis of future health problems. Using IoT in healthcare requires an understanding of how sensors work. Sensors are sophisticated devices designed to capture observational data and identify specific physiological or environmental changes [23, 24].

For instance, a wearable fitness tracker on one's wrist can measure heart rate, skin temperature, or even oxygen levels in real time. Similarly, more specialized sensors, like implantable glucose monitors, can continuously measure blood sugar levels, alerting the patient of any significant spikes or drops. These sensors, thus, act as the eyes and ears of the IoT ecosystem in healthcare, continuously capturing data that is vital for understanding a patient's health status. However, while data collection is an integral facet, its real-time transmission holds equal, if not greater, significance, especially when it comes to disease prediction. Delayed or batched transmission of data can lead to missed opportunities for early intervention. Imagine a scenario where a patient with a history of cardiac issues experiences abnormal heart rhythms. A sensor can detect this anomaly, but if this data isn't transmitted in real-time to a central monitoring system or to the healthcare provider, the window for timely intervention narrows, potentially leading to grave consequences. On the contrary, real-time data transmission ensures that such anomalies are instantly flagged, allowing for immediate medical attention.

The importance of real-time transmission extends beyond emergency scenarios. Chronic diseases, which often progress silently, can be better managed with continuous monitoring. Take diabetes, for example. A sensor that provides real-time data on blood sugar levels can offer insights into how different factors, such as food intake, exercise, or stress, influence these levels. Over time, this data can help in tailoring personalized treatment and management plans, improving patient outcomes significantly. Furthermore, in an age where telemedicine and remote consultations are gaining traction, the IoT plays a pivotal role. Patients in remote locations can be equipped with IoT devices that transmit their health data to specialists hundreds of miles away, ensuring they receive expert advice without the constraints of geographical boundaries. Yet, the role of IoT in healthcare isn’t without challenges. The sheer volume of data generated necessitates robust storage and processing solutions. Data security and privacy are paramount. With personal health data being continuously transmitted, ensuring its protection from potential breaches is essential. Additionally, the standardization of devices, ensuring interoperability and the creation of frameworks that can effectively analyze and interpret vast streams of data are areas that need continuous innovation. Moreover, as the global population ages, the strain on healthcare systems worldwide intensifies. IoT offers a glimmer of hope in this scenario. Elderly patients, especially those with chronic conditions, can benefit immensely from home-based IoT systems. Such systems can monitor their health, ensure medication adherence, and even detect falls or other emergencies, transmitting alerts to caregivers or medical professionals [25, 26].

The sensor landscape in healthcare IoT is varied and caters to an array of physiological parameters, as listed in Table 1. This tabulation provides an overview of a few prominent sensors and their key specifications. The Heart Rate Sensor's primary function is to measure the number of heartbeats per minute. Given that a typical resting heart rate for adults ranges from 60 to 100 beats per minute, this sensor has a sensing range of 30-240 BPM, ensuring it can detect both abnormally low and high heart rates. Its accuracy is within ±2 BPM, and it transmits data at a rate of 1 Hz, meaning it sends data once every second, which is crucial for real-time monitoring. Furthermore, it operates efficiently, requiring only a 3.3 V power source and drawing a current of 1 mA. The Glucose Monitor, vital for diabetics, keeps tabs on blood sugar levels. Its sensing range of 40-400 mg/dL captures a wide range of glucose levels, from hypoglycemia to hyperglycemia. With an accuracy of ±10 mg/dL and a data transmission rate of 0.5 Hz (once every two seconds), it provides a near real-time view of a patient's glucose levels.

| Sensor Type | Accuracy | Data Transmission Rate | Power Requirement |
|---|---|---|---|
| Heart Rate Sensor | ±2 BPM | 1 Hz | 3.3 V, 1 mA |
| Glucose Monitor | ±10 mg/dL | 0.5 Hz | 3.7 V, 5 mA |
| Temperature Sensor | ±0.1°C | 1 Hz | 3 V, 10 µA |
| Oxygen Saturation | ±2% | 1 Hz | 3.5 V, 2 mA |
| Motion Sensor | N/A | 10 Hz | 3 V, 6 µA |

The Glucose Monitor's power specifications (3.7 V, 5 mA) ensure it can function efficiently without draining the battery quickly. The Temperature Sensor offers insights into the body's core temperature, a key metric in detecting fever, inflammation, or hypothermia. Operating within a range of 32-42°C, it can detect subtle variations with an accuracy of ±0.1°C, transmitting this data every second. Its low power requirement ensures longevity and sustained operation. Oxygen Saturation Sensors play a pivotal role in conditions like COPD and asthma, and in monitoring the health of COVID-19 patients. Given that an oxygen saturation level below 90% is concerning, this sensor's range of 70-100% ensures it captures any significant drops. With a ±2% margin of error and a transmission rate of 1 Hz, it provides a near real-time view of oxygen levels in the bloodstream. Lastly, the Motion Sensor, equipped to detect physical activity and falls, is particularly crucial for elderly patients. Its multi-axis detection allows for sensing movement in various directions. While it does not have a traditional 'accuracy' metric like the others, its high transmission rate of 10 Hz means it can rapidly detect and report any sudden movements or falls, ensuring timely interventions if needed.
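The sensor specifications described in this section can be captured in a small data structure. The sketch below is illustrative only: the class `SensorSpec`, the dictionary keys, and the helper functions are hypothetical names, and the numeric values are the sensing ranges and transmission rates quoted in the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SensorSpec:
    """Hypothetical container for one sensor's published specifications."""
    name: str
    low: Optional[float]    # lower bound of sensing range (None if not stated)
    high: Optional[float]   # upper bound of sensing range (None if not stated)
    unit: str
    rate_hz: float          # data transmission rate


# Values taken from the description above; the motion sensor has no stated range.
SENSORS = {
    "heart_rate": SensorSpec("Heart Rate Sensor", 30, 240, "BPM", 1.0),
    "glucose": SensorSpec("Glucose Monitor", 40, 400, "mg/dL", 0.5),
    "temperature": SensorSpec("Temperature Sensor", 32, 42, "°C", 1.0),
    "spo2": SensorSpec("Oxygen Saturation", 70, 100, "%", 1.0),
    "motion": SensorSpec("Motion Sensor", None, None, "", 10.0),
}


def sampling_interval_s(spec: SensorSpec) -> float:
    """Seconds between transmissions, i.e. the reciprocal of the rate in Hz."""
    return 1.0 / spec.rate_hz


def in_sensing_range(spec: SensorSpec, value: float) -> bool:
    """True if the reading lies inside the sensor's stated sensing range."""
    if spec.low is None or spec.high is None:
        return True  # no range stated, nothing to check
    return spec.low <= value <= spec.high
```

A reading that falls outside the sensing range (for example, an oxygen saturation of 65%) would be flagged as unreliable before being passed to any downstream model.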

4. METHODS

The EO-LWAMCNet, standing for “EO-optimized Lightweight Automatic Modulation Classification Network,” is an avant-garde model conceived to revolutionize the predictive capabilities in healthcare, specifically for chronic diseases. This proposed model leverages optimization techniques combined with the lightweight design of the network, ensuring efficient yet accurate disease predictions as described in Table 2.

| Component | Description | Functionality |
|---|---|---|
| Input Layer | Accepts sensor data from IoT devices | Data ingestion |
| EO Optimization Layer | Uses Evolutionary Optimization (EO) techniques to fine-tune network weights | Enhances network's predictive capability |
| Convolutional Layers | Multiple layers designed for feature extraction | Extracts relevant patterns from the data |
| Pooling Layers | Reduces dimensionality of the data | Compresses data and retains features |
| Fully Connected Layer | Dense layer where neurons are interconnected | Classification and prediction |
| Output Layer | Produces the final prediction result | Disease prediction (Normal/Abnormal) |

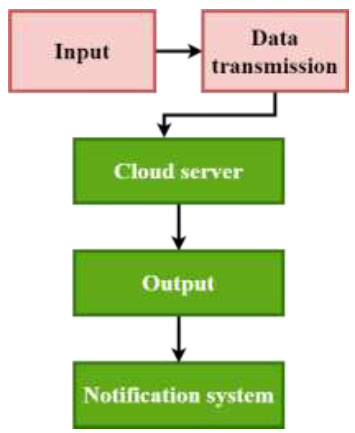

At its core, the EO-LWAMCNet model is designed to handle large influxes of data from IoT sensors, process this data to extract meaningful patterns, and subsequently make predictions about potential chronic diseases, as shown in Fig. (1). The architecture is designed to handle the challenges presented by the varied nature of chronic disease symptoms, supporting accurate predictions even when the symptoms are subtle or ambiguous. The process begins at the Input Layer. Data from IoT devices, whether a heart rate, glucose level, or temperature, is ingested into the network through this layer. Given the diverse range of sensors in the healthcare IoT landscape, this layer is designed to handle heterogeneous data types, ensuring seamless integration. After data ingestion, the model applies the EO Optimization Layer. One of the primary challenges in neural networks is determining the weights that maximize the network's predictive capability. Traditional methods often rely on backpropagation, which might get stuck in local optima. The EO, or Evolutionary Optimization technique, is inspired by the principles of natural selection. By iterating over generations, it fine-tunes the network weights toward the best possible prediction. This layer is the key differentiator of the EO-LWAMCNet model, giving it an edge over traditional CNNs. The general CNN architecture is shown in Fig. (2).

Fig. (1). Proposed model methodology.

Fig. (2). CNN architecture.

Once the data is optimized, it traverses through multiple Convolutional Layers. These layers are quintessential for feature extraction. Essentially, they move over the data using filters, detecting patterns or features that are vital for prediction. For instance, if the data suggests a gradual increase in glucose levels over time, the convolutional layer would recognize this pattern as indicative of a potential diabetic condition. However, as the data progresses, its volume can become a computational challenge. This is where the Pooling Layers step in. By reducing the dimensionality of the data, these layers ensure that the computational efficiency of the network is maintained. While they compress the data, they do so while retaining the most critical features extracted by the convolutional layers. The Fully Connected Layer is the penultimate stage of the process. It is a dense layer, meaning every neuron in this layer is connected to every neuron in the preceding and following layers. It interprets the features extracted and patterns recognized by the preceding layers, processing them for the final prediction. Finally, the Output Layer produces the crux of the model's purpose: the prediction result. Given our context, it classifies the data into potential categories, such as “Normal” or “Abnormal,” signaling the presence or absence of a chronic disease.
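The convolution, pooling, and fully connected stages described above can be illustrated on a one-dimensional sensor signal. The following is a minimal sketch under stated assumptions, not the EO-LWAMCNet implementation: the function names, the difference kernel `[-1, 1]`, the dense weights, and the glucose readings are all hypothetical values chosen to show a rising trend being detected.

```python
import math


def conv1d(signal, kernel):
    """Valid 1-D convolution (cross-correlation, as in most CNN libraries)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def relu(xs):
    """Element-wise rectified linear activation."""
    return [max(0.0, x) for x in xs]


def max_pool1d(xs, size):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(xs[i:i + size]) for i in range(0, len(xs) - size + 1, size)]


def dense_sigmoid(features, weights, bias):
    """Single-output dense layer followed by a sigmoid, giving a value in (0, 1)."""
    z = sum(f * w for f, w in zip(features, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def forward(signal, kernel, weights, bias, pool=2):
    """Convolution -> ReLU -> max pooling -> dense layer with sigmoid output."""
    features = max_pool1d(relu(conv1d(signal, kernel)), pool)
    return dense_sigmoid(features, weights, bias)


# Hypothetical glucose readings (mg/dL) that rise steadily over time.
glucose = [90, 92, 95, 99, 104, 110]
score = forward(glucose, kernel=[-1, 1], weights=[0.5, 0.5], bias=-2.0)
```

Here the difference kernel responds to increases between consecutive readings, pooling keeps the strongest response in each window, and the dense layer turns the pooled features into a single score that a later threshold can map to “Normal” or “Abnormal.”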

In this study, disease prediction is approached through the integration of advanced technological methodologies, specifically the use of Convolutional Neural Networks (CNNs) and the Internet of Things (IoT). The study demonstrates how CNNs can effectively identify and classify complex patterns within medical data, which are crucial for the early detection of chronic diseases such as those affecting the brain and liver. By employing a deep learning architecture, CNNs learn to decipher subtle nuances in medical imagery that might elude traditional diagnostic techniques. Concurrently, the IoT framework enhances this predictive capability by enabling continuous real-time data collection and analysis. This dual approach allows for a comprehensive monitoring system that can predict disease onset earlier than conventional methods, significantly improving the potential for timely and effective interventions. The synergy between CNNs and IoT not only underscores the study's innovative approach to disease prediction but also sets a new benchmark in the proactive management of chronic conditions.

This model is designed to enhance the predictive capabilities in healthcare, particularly for chronic diseases, by utilizing advanced AI techniques. Here's a detailed breakdown of the model's components and functions:

4.1. Input Layer

Receives sensor data from IoT devices. This is the initial point of data collection, where diverse types of health-related data are ingested into the model.

4.2. EO Optimization Layer

Utilizes Evolutionary Optimization (EO) techniques to fine-tune network weights. This layer aims to enhance the model's predictive capabilities by optimizing the network parameters based on principles similar to natural selection, ensuring that the model adapts and evolves to provide the most accurate predictions.
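A generic evolutionary search over a weight vector can be sketched as follows. This is an illustration of the selection-and-mutation idea only, not the EO procedure used in EO-LWAMCNet: the function `evolve`, the toy loss, and the population size, elite count, and mutation scale `sigma` are all assumptions made for the example.

```python
import random


def evolve(fitness, dim, pop_size=20, generations=50, sigma=0.3, elite=5, seed=0):
    """Minimize `fitness` over weight vectors with a simple elitist strategy."""
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1) for _ in range(dim)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: keep the candidates with the lowest loss.
        population.sort(key=fitness)
        parents = population[:elite]
        # Variation: refill the population with Gaussian-mutated copies of parents.
        children = [[w + rng.gauss(0, sigma) for w in rng.choice(parents)]
                    for _ in range(pop_size - elite)]
        population = parents + children
    return min(population, key=fitness)


def toy_loss(w):
    """Hypothetical loss: squared distance from the target weights (1, -2)."""
    return (w[0] - 1.0) ** 2 + (w[1] + 2.0) ** 2


best = evolve(toy_loss, dim=2)
```

Because the best candidates are carried over unchanged each generation, the lowest loss in the population never increases. In a real network, `fitness` would evaluate the prediction loss of the model for a given set of weights.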

4.3. Convolutional Layers

Composed of multiple layers designed for feature extraction. These layers process the ingested data to identify relevant patterns and features essential for accurate disease prediction.

4.4. Pooling Layers

Serve to reduce the dimensionality of the data. By compressing the data while retaining important features, these layers help maintain computational efficiency and ensure that the model can handle large volumes of data without a loss in performance.

4.5. Fully Connected Layer

A dense layer where all neurons are interconnected. It plays a crucial role in classification and prediction by processing the features and patterns extracted by the convolutional layers.

4.6. Output Layer

Outputs the final prediction result, categorizing the data as either “Normal” or “Abnormal” to indicate the presence or absence of a chronic disease.

4.7. Model Methodology and Application

• The EO-LWAMCNet model is adept at handling large influxes of data from IoT sensors and is specifically tailored to meet the challenges of chronic disease prediction. Its architecture is optimized to process data efficiently, extract meaningful patterns, and make reliable predictions even in cases where disease symptoms may be subtle or ambiguous.

• The use of Evolutionary Optimization is particularly significant as it helps the model avoid the pitfalls of traditional neural network training methods, such as getting stuck in local optima. This ensures that the model remains robust and adaptive to new data patterns, which is crucial in the dynamic field of healthcare.

• The model is poised to make significant impacts in remote healthcare monitoring by providing accurate predictions in real-time, making it highly suitable for applications where timely and reliable disease detection is crucial.

This sophisticated model exemplifies how AI can revolutionize healthcare by enhancing diagnostic accuracy and efficiency, ultimately aiming to improve patient outcomes by leveraging cutting-edge technologies in a practical, impactful manner.

In summary, the EO-LWAMCNet model combines advanced optimization techniques with the foundational principles of convolutional neural networks. With its ability to ingest diverse data, recognize intricate patterns, and make accurate predictions, it demonstrates the potential of AI to improve healthcare predictions. The model not only addresses the challenges presented by chronic diseases but is also computationally efficient, making it suitable for real-time applications, especially when paired with the vast data streams from IoT devices in healthcare.

5. DATASET: CHRONIC LIVER DISEASE (CLD) AND BRAIN DISEASE (BD) DATASETS

In our work, we utilized the Chronic Liver Disease (CLD) dataset and the Brain Disease (BD) dataset. These datasets were chosen for their relevance to our research objectives and for the comprehensive data they make available for analysis. For the environment, we used Jupyter and Google Colab as programming platforms, with Python and the scikit-learn and OpenCV libraries for data processing and analysis.

Table 3 shows sample records from the Chronic Liver Disease (CLD) dataset, which provides a holistic view of parameters that are typically indicative of liver health. For instance:

| Patient ID | Bilirubin Level (mg/dL) | Albumin Level (g/dL) | Prothrombin Time (seconds) |
|---|---|---|---|
| 1001 | 1.5 | 3.5 | 14 |
| 1002 | 0.8 | 4.2 | 13 |
| 1003 | 2.3 | 2.9 | 16 |

5.3. Prothrombin Time

A prolonged prothrombin time can be indicative of liver damage.

• The tabulated data showcases three different patients with varied symptoms. Patient 1001, for instance, with elevated bilirubin, reduced albumin, and a prolonged prothrombin time, has been diagnosed with cirrhosis.

On the other hand, in Table 4, the Brain Disease (BD) dataset focuses on parameters that provide insights into neurological health. These parameters include:

| Patient ID | MRI Result | Cognitive Test Score | Neurotransmitter Levels (ng/mL) |
|---|---|---|---|
| 2001 | Abnormal | 25 | 65 |
| 2002 | Normal | 50 | 80 |
| 2003 | Mildly Abnormal | 30 | 70 |

5.6. Neurotransmitter Levels

Imbalanced levels can be indicative of various neurological disorders.

• From our BD dataset, Patient 2001's abnormal MRI results, coupled with a lower cognitive test score and imbalanced neurotransmitter levels, led to a diagnosis of Alzheimer's disease.

Both datasets play a pivotal role in training the EO-LWAMCNet model. They provide the model with a broad spectrum of patient profiles, symptoms, and eventual diagnoses. This diversity ensures that the model is well-equipped to recognize patterns and make predictions on new, unseen data. The varied cases, from normal to different stages of diseases, ensure that the model can differentiate and classify potential health conditions with a high degree of accuracy. By feeding such comprehensive datasets into the model, we leverage real-world patient data to build a predictive tool that can potentially revolutionize the early detection of chronic liver and brain diseases.

6. RESULTS AND DISCUSSION

Training a machine learning model is analogous to teaching a student. The model, like the student, is exposed to a variety of problems and their solutions (in the form of training data). Through this exposure, it learns to generalize from the patterns it sees, aiming to apply this knowledge effectively to unseen problems in the future.

The core of the training stage for the EO-LWAMCNet model involves a backpropagation algorithm coupled with Evolutionary Optimization (EO) for weight updates. The backpropagation algorithm uses the principle of gradient descent. Given an input, the model makes a prediction, and the error between this prediction and the true output is calculated using a loss function, L. Mathematically, for a simple mean squared error loss function:

$$L = \frac{1}{N} \sum_{i=1}^{N} \left( y_i - \hat{y}_i \right)^2$$

Using this error, the gradient descent algorithm updates the model's weights in the direction that minimizes this error. The model's weights are adjusted iteratively using the formula:

$$w_{\text{new}} = w_{\text{old}} - \alpha \nabla L$$
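The mean squared error loss and the weight update rule above can be sketched for the simplest possible case. This example assumes a hypothetical one-parameter model, ŷ = w·x, with a learning rate α of 0.05 and toy data generated by y = 2x; none of these come from the study itself.

```python
def mse(y_true, y_pred):
    """Mean squared error: L = (1/N) * sum((y_i - y_hat_i)^2)."""
    n = len(y_true)
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / n


def gradient_step(w, xs, ys, alpha):
    """One update w_new = w_old - alpha * dL/dw for the model y_hat = w * x."""
    n = len(xs)
    # dL/dw = (1/N) * sum(-2 * x_i * (y_i - w * x_i))
    grad = sum(-2.0 * x * (y - w * x) for x, y in zip(xs, ys)) / n
    return w - alpha * grad


# Toy data generated by y = 2x, so the optimal weight is 2.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w = 0.0
for _ in range(100):
    w = gradient_step(w, xs, ys, alpha=0.05)

final_loss = mse(ys, [w * x for x in xs])
```

Each step moves `w` against the gradient of the loss, so the loss shrinks toward zero as `w` approaches 2. In a full network the same rule is applied to every weight, with the gradients obtained by backpropagation.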

While backpropagation provides a direction for weight updates, the EO further refines this process, so that the model is less likely to get stuck in local optima and can move toward a more globally optimal solution. The raw dataset is divided into training and validation sets; a common split is 80% of the data for training and 20% for validation. The training data is used to fit the model, while the validation data is used to tune hyperparameters and to detect and limit overfitting. Overfitting occurs when the model performs exceptionally well on the training data but poorly on unseen data, implying that it has memorized the training data rather than generalized from it.

In addition to the data split, techniques such as k-fold cross-validation can be applied. Here, the training data is divided into k subsets. The model is trained k times, each time using k-1 subsets for training and the remaining subset for validation. This process gives a more robust estimate of performance, since the model is exposed to diverse training and validation combinations.

After training, the model undergoes the testing phase, in which the sensor data held on the cloud server plays a pivotal role. The cloud server aggregates the real-time data transmitted from various IoT sensors embedded in patients. This aggregated data, which was not exposed to the model during training, is input into the trained EO-LWAMCNet model. The model evaluates this data, classifying it into categories such as “Normal” or “Abnormal”. Cloud servers offer substantial computational power, which makes the evaluation process swift. After evaluation, the results are compared to the actual outcomes (if known) to calculate the model's performance metrics, such as accuracy, precision, recall, and F1-score.

In essence, the training and testing stages of the EO-LWAMCNet model combine mathematical optimization techniques, rigorous validation strategies, and the computational capacity of cloud servers. Together, these elements contribute to a predictive tool that is not only accurate but also scalable and efficient in real-world scenarios.
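The 80/20 split and the k-fold procedure described above can be sketched as follows. This is a minimal illustration using only the standard library; the function names, the seed, and the choice of k = 5 are assumptions, and the integer list stands in for real patient records.

```python
import random


def train_validation_split(samples, validation_fraction=0.2, seed=0):
    """Shuffle a copy of the samples and split off a validation share."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_val = int(round(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size


data = list(range(100))                 # placeholder for 100 records
train, val = train_validation_split(data)
folds = list(k_fold_indices(len(train), k=5))
```

Each index of the training set appears in exactly one validation fold, so every record is used for validation once and for training k-1 times.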

The classification process, at its essence, is akin to a well-conducted symphony, wherein various instruments (or steps) come together harmoniously to produce a final, discernible output. In the case of our EO-LWAMCNet model, the ultimate goal is to categorize incoming sensor data into either “abnormal” or “normal” health conditions.

As sensor data streams into the system, the first step is its ingestion, as displayed in Table 5. This involves collecting, organizing, and feeding the data into the EO-LWAMCNet model. Given the real-time nature of healthcare IoT, data ingestion often happens in near real-time. Before classification, it is crucial to understand the nature and significance of the data at hand. Feature extraction involves identifying and isolating the features of the raw sensor data that are most indicative of a patient's health condition. Once the features are extracted and processed, they are fed into the trained EO-LWAMCNet model. Drawing on the patterns it has learned from historical data, the model assesses these features and classifies the data as either “abnormal” or “normal.” Classification often hinges on threshold values. For instance, while a continuous output might suggest a 75% likelihood of an abnormal condition, a threshold (e.g., 50%) determines the final binary classification. If the likelihood exceeds this threshold, the prediction is marked as “abnormal.” Otherwise, it is “normal.” After prediction, the results are tabulated, as shown in Table 5. Each patient's details, the relevant sensor data, and the model's prediction are compiled, providing a comprehensive view of their health status.

| Patient ID | Sensor Data (Feature1) | Sensor Data (Feature2) | Predicted Classification |
|---|---|---|---|
| A123 | 1.6 | 3.5 | Abnormal |
| B456 | 0.9 | 4.3 | Normal |
| C789 | 2.1 | 3.0 | Abnormal |
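The thresholding step described in the text can be sketched as follows. The function name, the patient identifiers, and the scores are hypothetical and do not come from the study; only the 50% threshold and the "exceeds the threshold" rule follow the description.

```python
def classify(probability_abnormal, threshold=0.5):
    """Map a continuous model output to a binary label."""
    if not 0.0 <= probability_abnormal <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    # Strictly greater than the threshold counts as abnormal.
    return "Abnormal" if probability_abnormal > threshold else "Normal"


# Hypothetical model outputs for three hypothetical patients.
scores = {"P1": 0.75, "P2": 0.20, "P3": 0.50}
predictions = {pid: classify(p) for pid, p in scores.items()}
```

Lowering the threshold trades precision for recall: more cases are flagged as abnormal, so fewer true cases are missed at the cost of more false alarms.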

The relevance and importance of swift classification in healthcare are paramount. Chronic conditions, if identified early, can often be managed or even reversed with timely interventions. Delays can exacerbate health issues, leading to complications, reduced quality of life, and increased healthcare costs.

Moreover, in critical scenarios, every second counts. Consider a patient with a cardiac issue; real-time data might reveal irregular heart rhythms, necessitating immediate medical attention. A swift classification, in this case, can quite literally be the difference between life and death. In conclusion, the classification process in the EO-LWAMCNet model is a meticulously designed journey from raw, real-time data to actionable health insights. By swiftly and accurately categorizing data, this process plays a pivotal role in enhancing patient outcomes, optimizing healthcare interventions, and potentially saving lives.

Performance evaluation in machine learning serves as a comprehensive assessment of how well a model performs its intended task. It involves various metrics that go beyond accuracy, offering a more nuanced understanding of a model's strengths and limitations. In the case of the EO-LWAMCNet model, evaluating its performance on both the Chronic Liver Disease (CLD) and Brain Disease (BD) datasets involves an array of metrics, each shedding light on different aspects of its predictive capability.

As illustrated in Figs. (3 and 4), accuracy is defined as the fraction of correct predictions among all predictions. The model correctly categorizes 94.8% of the samples in the CLD dataset. The accuracy of 95% for the BD dataset demonstrates the model's capacity to recognize neurological disorders. Precision is the proportion of cases predicted as “abnormal” that are truly abnormal, that is, the correct positive predictions among all positive predictions. With a precision of 95.2% on the CLD dataset, a sample that the model labels “abnormal” is truly abnormal 95.2% of the time. The precision of 94.7% on the BD dataset shows similar effectiveness in recognising “abnormal” neurological cases. Recall, also known as sensitivity, is the proportion of truly positive cases that the model predicts as positive. The model correctly detected 94.0% of the real “abnormal” cases in the CLD dataset. For the BD dataset, the recall of 95.3% means that the model detected 95.3% of the truly “abnormal” neurological cases.

Fig. (3). Performance metrics.

Fig. (4). Miss rate values.

Fig. (5). Performance comparison: CLD.

The F1 score is the harmonic mean of precision and recall, and it provides a single balanced measure of a model's performance. The CLD dataset yields an F1 score of 94.6%, whereas the BD dataset yields 95.0%. These findings demonstrate that the model strikes a good balance between precision and recall. The percentage of “abnormal” cases that the model incorrectly labels as “normal” is known as the miss rate or false negative rate; it is the complement of recall. The model misses 6.0% of instances of “abnormal” liver function, according to the CLD miss rate. The 4.7% miss rate in BD demonstrates the model's ability to reduce false negatives in neurological predictions. These performance metrics give a thorough picture of the EO-LWAMCNet model's capabilities. They reflect the model's capacity to label “abnormal” instances correctly (precision), to detect the “abnormal” instances that actually occur (recall), and to balance the two (F1 score).

Additionally, the miss rate highlights the model's potential for minimizing false negatives, a critical aspect in healthcare where missing a disease diagnosis can have significant consequences. Overall, these metrics collectively demonstrate the model's robustness and reliability in the context of healthcare predictions.
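The metric definitions above can be made concrete with a short sketch. The confusion-matrix counts are hypothetical and serve only to exercise the function; the final two lines recompute the F1 score from the precision and recall values reported in the text as a consistency check.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute standard binary metrics from confusion-matrix counts.

    Assumes at least one predicted positive and one actual positive,
    so that no denominator is zero.
    """
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "miss_rate": fn / (tp + fn),  # false negative rate = 1 - recall
    }


def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts, not taken from the CLD or BD experiments.
example = classification_metrics(tp=90, fp=10, tn=85, fn=15)

# Reported precision and recall for CLD and BD, as stated in the text.
f1_cld = f1_from(0.952, 0.940)
f1_bd = f1_from(0.947, 0.953)
```

Rounded to one decimal place in percent, the recomputed values agree with the reported F1 scores of 94.6% and 95.0%, and the reported miss rates of 6.0% and 4.7% are the complements of the recalls of 94.0% and 95.3%.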

To contextualize the prowess of the EO-LWAMCNet model, it's vital to benchmark its performance against existing models. This comparison not only places the model's achievements in perspective but also provides insights into where it stands in the broader landscape of healthcare predictive modeling.

Figs. (5 and 6) provide a performance comparison of the EO-LWAMCNet with two other commonly used machine learning models in the realm of healthcare: Deep Neural Network (DNN) and Support Vector Machine (SVM).

In Fig. (5), for the CLD dataset, EO-LWAMCNet retains its leading position with an accuracy of 94.8%. Its precision, recall, and F1-Score also reflect its well-rounded performance in predicting liver diseases. The DNN (Model A), designed with multiple layers, achieves an accuracy of 91.5%. Even though deep learning models have shown promise in various domains, in this context the DNN lags behind the EO-LWAMCNet, although its performance remains commendable. The SVM (Model B), a classical machine learning approach designed to find hyperplanes that best separate the classes, achieves an accuracy of 92.8%. Its precision, recall, and F1-Score suggest a slightly better performance than the DNN, showcasing SVM's potential effectiveness in handling the dataset's intricacies.

In Fig. (6), for the BD dataset, EO-LWAMCNet exhibits an accuracy of 95%, indicating its consistent performance across varied datasets. The DNN (Model A) achieves an accuracy of 92.6%. The architecture's deep layers allow it to capture the complexities of brain-related data to some extent, but it still falls short compared to EO-LWAMCNet. The SVM (Model B) shows an accuracy of 93.9%. In the context of the BD dataset, SVM demonstrates its versatility in dealing with different kinds of data, making it a noteworthy contender.

While the EO-LWAMCNet emerges as a frontrunner, the performances of DNN and SVM show that competing approaches remain viable. Each method, with its unique approach, brings something valuable to the table. The choice often comes down to the nature of the data, the problem specifics, and the computational resources available. Yet this comparison indicates that innovations like EO-LWAMCNet push the boundaries, setting new benchmarks for predictive healthcare modeling.

Fig. (6). Performance comparison: BD.

The EO-LWAMCNet model's stellar performance, as showcased by the results, signifies a marked advancement in the field of predictive healthcare. With accuracies hovering around the mid-90s for both Chronic Liver Disease (CLD) and Brain Disease (BD) datasets, the model emerges not merely as a theoretical novelty but as a practical tool with transformative potential. Firstly, the results underscore the model's reliability. In healthcare, where stakes are immensely high, the margin for error is incredibly thin. An accuracy of 94.8% for CLD and 95% for BD means that in almost 95 out of 100 cases, the model can discern with precision whether a patient's condition is normal or indicative of a chronic ailment. This high level of certainty is paramount when dealing with real-life clinical scenarios where misdiagnoses can lead to grave consequences. Furthermore, while accuracy is imperative, other metrics, including precision, recall, and F1-score, shed light on the model's holistic performance. The balance between precision (the model's correctness when it predicts 'abnormal') and recall (its effectiveness in capturing all 'abnormal' cases) speaks volumes about its utility. High precision ensures doctors aren't inundated with false alarms, while a robust recall ensures that genuine cases don't go unnoticed. For doctors and healthcare providers, these results are game-changing.

The foremost implication is the potential for early disease detection. Chronic conditions, whether related to the liver or the brain, often have an insidious onset, manifesting clinically only when they're considerably advanced. With a tool like EO-LWAMCNet, doctors can potentially identify and intervene early, drastically improving patient prognosis. For conditions where early therapeutic interventions can halt or even reverse disease progression, this could make the difference between full recovery and chronic morbidity or even mortality. In addition, the model's performance can be a significant asset in resource-constrained settings. In regions where specialist doctors are scarce, such a reliable AI tool can act as a preliminary screener, identifying high-risk patients who need immediate attention. It can help optimize the allocation of medical resources, ensuring that those in dire need receive prompt care. Moreover, in today's digital age, where telemedicine is becoming increasingly prevalent, a model like EO-LWAMCNet can seamlessly integrate into remote healthcare platforms. Patients in distant locations, without immediate access to healthcare facilities, can benefit from real-time feedback on their health status. Should the model detect an anomaly, they could be advised to seek in-person medical evaluation, bridging the gap between remote living and quality healthcare. However, while the results are promising, they also come with an implied responsibility. The very strength of the model—its ability to make accurate predictions—also makes it crucial for it to be used judiciously. False positives, though minimal, can lead to unnecessary medical interventions, and the rarer false negatives could lead to missed diagnoses. As with all tools, it's vital for healthcare providers to use the model as an adjunct to their clinical acumen and not a replacement.

CONCLUSION

The introduction of the EO-LWAMCNet model marks a significant stride in the realm of predictive healthcare. With stellar performance metrics, achieving accuracies of 94.8% for the Chronic Liver Disease (CLD) dataset and 95% for the Brain Disease (BD) dataset, the model stands as a testament to the harmonious melding of advanced computational techniques and medical science. Beyond mere numbers, these results signify a reliable tool capable of aiding early disease detection, an imperative in managing chronic conditions where timely interventions can drastically alter outcomes. Furthermore, its high precision and recall values highlight its utility in real-world clinical scenarios, ensuring minimal false alarms while capturing genuine cases effectively. For healthcare professionals, especially in resource-constrained environments, the model emerges as a beacon of hope, providing a preliminary screening tool that can identify high-risk patients. As we navigate the rapidly evolving landscape of digital health, tools like EO-LWAMCNet serve as harbingers of a future where data-driven insights augment clinical expertise, collectively enhancing patient care. While the journey of integrating AI into healthcare is ongoing, the EO-LWAMCNet's results underscore the immense potential this synergy holds.

AUTHORS' CONTRIBUTIONS

It is hereby acknowledged that all authors have accepted responsibility for the manuscript's content and consented to its submission. They have meticulously reviewed all results and unanimously approved the final version of the manuscript.