All published articles of this journal are available on ScienceDirect.

Utilizing Multi-layer Perceptron for Esophageal Cancer Classification Through Machine Learning Methods

Abstract

Aims

This study evaluates the effectiveness of several machine learning models in classifying esophageal cancer from MRI scans. The models examined include the Convolutional Neural Network (CNN), K-Nearest Neighbor (KNN), Recurrent Neural Network (RNN), and Visual Geometry Group 16 (VGG16), among others elaborated in this paper. The aim is to identify the most accurate model in order to improve diagnostic accuracy and support earlier detection of the disease. The ultimate goal is to improve clinical practice and its outcomes by applying advanced machine learning techniques to medical diagnosis.

Background

Esophageal cancer poses a critical problem for medical oncologists because its pathology is complex and its death rate is exceptionally high. Early detection is essential for effective treatment and improved survival. Conventional diagnostic methods, however, offer limited sensitivity and specificity. Recent progress in machine learning opens the possibility of diagnosis with high sensitivity and specificity. This paper explores the potential of different machine learning models for classifying esophageal cancer from MRI scans, to address the limitations of traditional diagnostic approaches.

Objective

This study aims to verify whether CNN, KNN, RNN, and VGG16, among other advanced machine learning models, can correctly classify esophageal cancer from MRI scans. It seeks to establish the diagnostic accuracy of each model and to identify the best among them. The work contributes to developing early detection mechanisms that can increase confidence in patient outcomes in the clinical setting.

Methods

This study applies a comparative analysis, using four machine learning models to classify esophageal cancer from MRI scans. Each model was trained and validated on a standardized set of MRI data. Model effectiveness was assessed using accuracy, precision, recall, and F1 score.

Results

In classifying esophageal cancer from MRI scans, the study found VGG16 to be the best-performing model, with an accuracy of 96.66%. CNN ranked second, with an accuracy of 94.5%, showing efficient spatial pattern recognition. The KNN and RNN models also performed commendably, with accuracies of 91.44% and 88.97%, respectively, reflecting their strengths in proximity-based learning and in handling sequential data. These findings underline the potential of machine learning models to add significant value to esophageal cancer diagnosis.

Conclusion

The study concluded that machine learning techniques, mainly VGG16 and CNN, have high potential to improve diagnostic precision in classifying esophageal cancer from MRI imaging. VGG16 achieved the highest accuracy, while CNN displayed strong spatial detection, followed by KNN and RNN. The results thus open opportunities for introducing advanced computational models into clinics, which might transform early-detection strategies and improve patient-centered outcomes in oncology.

1. INTRODUCTION

Esophageal cancer is a major problem in oncology that calls for creative methods for prompt diagnosis and categorization. It is the seventh most common cause of cancer-related deaths worldwide, with an increasing incidence rate [1, 2]. Not only is esophageal cancer common, but it also has several subtypes, such as squamous cell carcinoma and adenocarcinoma, each requiring a different diagnostic and treatment plan. Since advanced stages of esophageal cancer frequently have fewer treatment choices and worse survival rates, early identification is essential to improving patient outcomes. Unfortunately, symptoms may not become apparent until the illness has advanced, underscoring the urgent need for reliable categorization techniques that can detect the illness at an early stage.

While endoscopy and biopsy are essential methods for detecting esophageal cancer, they are also invasive, resource-intensive, and may not be available to all populations. This calls for the investigation of readily available, non-invasive alternatives where machine learning methods become useful. Our goal is to apply computational methods, namely the Multi-Layer Perceptron (MLP) technique, to create a classification model that can distinguish between various stages and subtypes of esophageal cancer using a variety of data sources. This work presents a viable path to increase diagnostic accuracy and enable prompt intervention by addressing the current barriers to esophageal cancer early detection [3, 4].

Beyond this specific medical case, this work has implications for healthcare more broadly. A working classification model for esophageal cancer might serve as a template for similar projects in cancer research. In addition, the use of machine learning methods for cancer detection fits the larger paradigm shift towards precision medicine, which customizes treatment plans based on individual patient profiles. As a result, this study advances the general objective of moving healthcare toward more individualized and efficient treatments, in addition to helping address a particular medical condition [5, 6].

The primary aim of this research is to construct and apply a reliable MLP classification model for esophageal cancer; the secondary aim is to evaluate the model's performance. Building this model requires careful examination of several data sets involving biological, medical, and imaging data. By integrating these sources of information, we aim to obtain a comprehensive and accurate view of the varied presentations of esophageal cancer, which will support precise categorization. The study further seeks to improve the MLP model so that it achieves higher accuracy and applies more widely across patient categories. Moreover, because the literature indicates that presentations of esophageal cancer vary considerably, a model is needed that can adjust to variations in population composition and geographic predisposition. The research also examines how the MLP model could be used in prognostic analyses that enable doctors to intervene based on forecasts of how a patient's health will change over time.

Because of the internal complexity and diversity of esophageal cancer described above, machine learning techniques are adopted to classify the disease. Since the anatomy is very complex, esophageal cancer may not be easy to detect, especially in the early stages when symptoms are negligible or non-existent. The MLP model used in this study takes a data-driven approach to the complex patterns within large and varied datasets, which conventional diagnostic procedures usually miss. Machine learning models are valued largely for their capacity to adapt and learn over time, so that the accuracy of their diagnoses can steadily increase. This flexibility is essential in cancer diagnostics, where datasets constantly grow in volume because of changing medical perspectives and rapidly advancing technology.

In our study, we integrate Explainable Artificial Intelligence (XAI) to enhance the transparency and interpretability of our AI models, ensuring that the decision-making processes are understandable and accessible to clinicians and researchers. This integration is essential for building trust, improving diagnostic accuracy, ensuring accountability, and facilitating error analysis, thereby contributing to more reliable and effective healthcare solutions.

XAI plays a crucial role in enhancing the transparency, trustworthiness, and usability of AI models, particularly in the healthcare domain. In addition, by providing clear and understandable explanations of AI decision-making processes, XAI helps clinicians and researchers better understand how and why certain predictions or classifications are made. This is particularly important in medical diagnostics, where understanding the rationale behind a model's decision can aid in gaining clinician trust, ensuring patient safety, and improving clinical outcomes.

Incorporating XAI into our study allows for the following:

1.1. Enhanced Trust

Clinicians are more likely to trust and adopt AI tools if they can understand the underlying decision-making process.

1.2. Improved Diagnostics

Explainable models can highlight critical features and patterns in the data that may be overlooked by humans, leading to more accurate and insightful diagnostics.

1.3. Accountability

XAI ensures accountability by providing traceable explanations for each decision, which is essential for ethical and legal compliance in healthcare.

1.4. Error Analysis

Understanding model decisions helps in identifying and correcting potential errors or biases in the AI system, leading to continuous improvement.

This study aims to provide a dynamic and ever-evolving tool that remains at the forefront of the categorizations of esophageal cancer using the learning capabilities of machine learning.

2. LITERATURE REVIEW

A number of studies on the challenging task of diagnosing esophageal cancer focus on novel approaches. Along this line, a growing body of research has examined how machine learning techniques improve diagnostic accuracy for esophageal cancer [7, 8]. Research has shown that computer algorithms such as Support Vector Machines (SVM), Neural Networks, and Decision Trees give promising results in automating the classification of cancerous and non-cancerous conditions. The data sources for this classification include histopathological and endoscopic images, among others [9-11]. There are, however, still several gaps in the existing body of research. One major concern is the growing need for non-invasive, widely scalable, and accurate diagnostics. Furthermore, the heterogeneity of esophageal cancer, with its various subtypes and stages, makes it a particular challenge for advanced models to capture even the smallest characteristics of the imaging data. Well-defined methodologies and large datasets are often missing from current research, which makes it harder to replicate and generalize findings [12-14].

This article aims to fill these gaps by applying a Multi-layer Perceptron technique to a meticulously curated database of 1844 MRI images. Magnetic resonance imaging is a non-invasive, widely used imaging technology that is useful for assessing structural and functional aspects of esophageal disorders. The MLP model, which is well known for its capacity to identify complex patterns in data, is used to strengthen the categorization of esophageal cancer. This work is, to the best of our knowledge, the first to offer novel insights into the implementation of machine learning methods in this setting, and it contributes to the current body of knowledge on esophageal cancer detection [15, 16].

In recent years, the application of machine learning (ML) techniques in the field of oncology has shown promising results, particularly in the classification and diagnosis of various types of cancer, including esophageal cancer. Among the diverse array of machine learning models, the Multi-Layer Perceptron (MLP), a class of feedforward artificial neural network (ANN), has garnered significant attention due to its ability to learn and model non-linear and complex relationships [17].

Explainable Artificial Intelligence (XAI) has emerged as a critical area of research in recent years, focusing on making AI models more transparent and interpretable. XAI techniques aim to provide clear explanations for the decision-making processes of complex machine learning models, which is particularly important in high-stakes domains like healthcare. Studies have shown that XAI not only enhances trust and accountability among users but also facilitates better error analysis and model debugging. In the context of medical diagnostics, XAI can help clinicians understand the rationale behind AI-driven predictions, thus improving clinical decision-making and patient outcomes. Various approaches, including model-agnostic methods and interpretable model architectures, have been proposed to achieve these goals, highlighting the growing importance of XAI in ensuring the reliability and ethical use of AI systems [18].

A substantial body of research has focused on enhancing the diagnostic accuracy of esophageal cancer using MLPs. One study [19] explored the capabilities of MLPs in distinguishing between benign and malignant esophageal tumors, demonstrating that MLPs could learn effectively from endoscopic images with a high degree of accuracy. The study emphasized the model's proficiency in feature extraction and classification, which are crucial for accurate diagnosis.

In addition, other research [20, 21] conducted a comparative study in which MLPs were evaluated against traditional machine learning algorithms such as Support Vector Machines (SVM) and Random Forests (RF). The MLP outperformed the other models in terms of precision and recall, highlighting its potential in handling the complexities associated with the histopathological data of esophageal cancer.

Additionally, recent advancements in deep learning have led to the integration of convolutional neural networks (CNNs) with MLPs to improve feature detection and classification accuracy. A few other studies [22, 23] developed a hybrid model that combines CNNs for feature extraction and MLPs for classification. This hybrid model addressed the limitations of MLP's dependency on handcrafted features and showcased an improved performance in detecting early-stage esophageal cancer.

The application of MLPs in esophageal cancer classification is challenging. Issues related to overfitting, the need for extensive data for training, and the interpretability of the model remain significant concerns. However, innovative solutions such as the incorporation of dropout techniques and the use of transfer learning are being explored to mitigate these challenges [24, 25].

Overall, the literature indicates that while MLPs present a viable solution for esophageal cancer classification, ongoing research is crucial to refine these models further. The future of MLPs in medical diagnostics looks promising, with potential improvements in computational methodologies and data handling strategies poised to enhance their diagnostic capabilities significantly.

3. METHOD

3.1. Data Collection

A dataset of 1844 MRI images of the esophagus was used in this study. The dataset was obtained from a publicly accessible repository on Kaggle, namely the “Esophageal Endoscopy Images” dataset (available at: https://www.kaggle.com/datasets/chopinforest/esophageal-endoscopy-images). Our team did not collect the data from clinical trials; because the data came from an open-access repository, ethical clearance from our institution was not required. The collection contains images of both cancerous and non-cancerous esophageal conditions, giving a thorough view of the disease. The MLP model is trained on the images in the dataset in order to classify them. The data were partitioned carefully so that training is performed correctly and the model is generalizable and robust. Exactly 553 images, accounting for 30%, are set aside for training purposes. From this subset, the MLP model is trained to identify patterns suggestive of esophageal cancer.
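
The subset sizes quoted for the 1844 images can be checked with a few lines of arithmetic. The sketch below is illustrative only: the function name `partition_sizes` is hypothetical, and truncating the fractional image count is an assumption about how the split was rounded.

```python
TOTAL_IMAGES = 1844      # dataset size stated in the text
SMALL_FRACTION = 0.30    # the 30% share stated in the text


def partition_sizes(total, fraction):
    """Return (small, large) subset sizes for a fractional split.

    The fractional count is truncated (an assumption), so
    30% of 1844 = 553.2 becomes 553 images.
    """
    small = int(total * fraction)
    return small, total - small


small, large = partition_sizes(TOTAL_IMAGES, SMALL_FRACTION)
large_share = round(100 * large / TOTAL_IMAGES, 1)  # percentage held by the larger subset
print(small, large, large_share)
```

Under these assumptions the two subsets add up to the full dataset, with the larger subset holding roughly 70% of the images.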

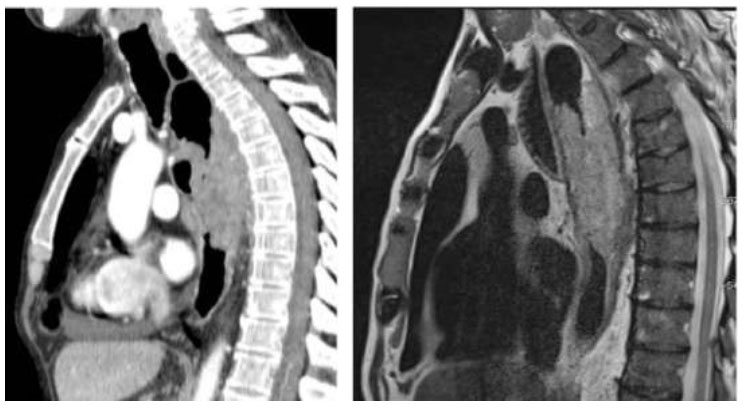

The remaining 1291 images, seventy percent of the total, are held out for testing. This set acts as a rigorous test set: it measures model performance on unseen data and helps ensure that the model works in real-world applications. A sample image used in this research is presented in Fig. (1), which depicts a side-by-side comparison of esophageal endoscopy images. The left image shows a CT scan, while the right image displays an MRI scan. Both images illustrate the esophagus and surrounding structures, highlighting the areas of interest for detecting esophageal cancer.

The CT scan (left) provides detailed cross-sectional images of the esophagus, allowing for the assessment of structural abnormalities and the detection of potential cancerous lesions. The MRI scan (right) offers superior soft tissue contrast, which is crucial for distinguishing between different types of tissue and identifying malignant growths. In our study, these imaging modalities are utilized to train and evaluate various machine learning models, including VGG16, CNN, KNN, and RNN, to improve the accuracy and efficiency of esophageal cancer diagnosis. Furthermore, by leveraging the strengths of both CT and MRI images, our approach aims to enhance early detection and provide valuable diagnostic insights to medical professionals.

Fig. (1). Sample MRI image used in this research.

Our study therefore prepared the data with care before developing a classification model of esophageal cancer based on MRI images using the Multi-layer Perceptron technique. The main objective of this step is to enhance the quality and relevance of the dataset so that the MLP model can derive meaningful features and patterns for correct classification.

3.1.1. Cleaning

A comprehensive cleaning operation is the first step in the data preparation process, which concerns fixing all errors, mistakes, and irregularities in the MRI dataset. This process involves identifying and dropping any inaccurate or missing images that may otherwise compromise the training process in the model. Techniques that reduce noise are applied to minimize the artifacts that may have occurred at the stage of data collection. Thorough cleansing is required to ensure that introduced biases or errors are minimized while building the model for a robust and reliable categorization system.

3.1.2. Normalization

This crucial processing step is applied to the MRI images after acquisition. It ensures that pixel values are on a comparable scale across images, even when the equipment and imaging settings differ substantially between them. It also ensures that no feature dominates model training simply because its intensity range is larger. Normalization is therefore essential for achieving a homogeneous feature scale. It improves convergence during training and generalization across imaging sources, which is critical for practical clinical use of the MLP model.
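
The text does not state which normalization scheme was used. As a minimal sketch, assuming simple min-max scaling to the range [0, 1], two images with very different raw intensity ranges can be brought onto the same scale as follows. The function name `min_max_normalize` and the toy arrays are hypothetical.

```python
import numpy as np


def min_max_normalize(image):
    """Rescale pixel intensities linearly to the range [0, 1]."""
    image = np.asarray(image, dtype=np.float64)
    lo, hi = image.min(), image.max()
    if hi == lo:
        # A constant image carries no contrast; avoid dividing by zero.
        return np.zeros_like(image)
    return (image - lo) / (hi - lo)


# Two toy "scans" with different raw intensity ranges (8-bit and 12-bit style).
scan_a = np.array([[0, 128], [64, 255]])
scan_b = np.array([[0, 2048], [1024, 4095]])

norm_a = min_max_normalize(scan_a)
norm_b = min_max_normalize(scan_b)
print(norm_a.min(), norm_a.max(), norm_b.min(), norm_b.max())
```

Other schemes, such as per-image standardization to zero mean and unit variance, would serve the same purpose; min-max scaling is chosen here only because it is the simplest to read.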

3.1.3. Feature Selection

Since MRI data are very high-dimensional, features must be selected carefully to optimize performance in identifying esophageal cancer. In this process, the dataset is analyzed extensively to identify features that substantially decrease the model's discriminating capacity; these are then removed. Consequently, the tendency to overfit and the processing overhead are reduced, enabling the MLP model to concentrate on the characteristics essential for accurate classification. The feature selection procedure is complex and requires a deep understanding of the biology of esophageal cancer as well as of the dataset. The chosen features should capture the essential intricacies of both malignant and non-cancerous conditions, and they should be robust to noise and minute alterations.

Table 1 lists the salient features of an MRI scan together with the corresponding relevance ratings to show how the criteria were selected for the categorization of esophageal cancer. The model's capacity to discriminate is greatly enhanced by spatial co-occurrence, wavelet transform, texture entropy, intensity histogram, and other characteristics with high relevance scores. Features such as Edge Detection and Gradient Magnitude are excluded because of their lower score. Furthermore, to accurately and consistently identify esophageal cancer using the Multi-layer Perceptron (MLP) approach, one must comprehend the enhanced feature set. This understanding is aided by the details on the attributes selected for the model provided in the “Selected” column.

| Feature Name | Importance Score | Selected |
|---|---|---|
| Intensity Histogram | 0.87 | Yes |
| Texture Entropy | 0.62 | Yes |
| Edge Detection | 0.45 | No |
| Spatial Co-occurrence | 0.78 | Yes |
| Gradient Magnitude | 0.56 | No |
| Wavelet Transform | 0.91 | Yes |
| Shape Descriptors | 0.73 | Yes |
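
The "Selected" column of the table is consistent with a simple score cutoff. The sketch below reproduces it under the assumption of a threshold of 0.60; the paper does not state the cutoff, and any value above 0.56 and at most 0.62 would give the same result. The function name `select_features` is hypothetical.

```python
# Importance scores copied from the feature table.
IMPORTANCE = {
    "Intensity Histogram": 0.87,
    "Texture Entropy": 0.62,
    "Edge Detection": 0.45,
    "Spatial Co-occurrence": 0.78,
    "Gradient Magnitude": 0.56,
    "Wavelet Transform": 0.91,
    "Shape Descriptors": 0.73,
}

THRESHOLD = 0.60  # assumed cutoff, not stated in the paper


def select_features(scores, threshold):
    """Keep the features whose importance score reaches the threshold."""
    return [name for name, score in scores.items() if score >= threshold]


selected = select_features(IMPORTANCE, THRESHOLD)
print(selected)
```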

Our study aims to enhance the detection of esophageal cancer by predicting the response from MRI images using various machine learning methodologies. The tools used for this purpose include a pre-trained VGG16 model, convolutional neural networks (CNN), K-Nearest Neighbors (KNN), and Recurrent Neural Networks (RNN). Each brings distinct advantages to the prediction task, underlining the adaptability needed to handle the intricacies of esophageal cancer categorization.

3.2. Convolutional Neural Network

In this study, the capabilities of Convolutional Neural Networks (CNN) are leveraged for the accurate prediction of esophageal cancer. The architecture of our CNN is built to capture the intricate spatial patterns in the MRI scans that are essential for differentiating between cancerous and non-cancerous conditions. Every layer plays a part in the CNN structure. The first layers, which convolve over the input MRI images, are the convolutional layers. These layers function as feature detectors because they can identify small-scale patterns like edges, textures, and shapes. The pooling layers then downsample the spatial dimensions while retaining the most prominent features. As the convolutional and pooling layers are stacked, a hierarchical representation of the features is created, enabling the model to detect progressively more complex patterns with increasing depth [26, 27].
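
To make the roles of the convolutional and pooling layers concrete, the following NumPy sketch applies one hand-written 3x3 edge filter, a ReLU, and 2x2 max pooling to a toy image. It is an illustration only, not the network used in this study: the kernel values, the image, and the function names are hypothetical, and a trained CNN learns its kernels rather than having them fixed.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide a kernel over a 2-D image (stride 1, no padding).

    As in most deep learning libraries, this is cross-correlation:
    the kernel is not flipped.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool2x2(feature_map):
    """Downsample by keeping the maximum of each 2x2 block."""
    h, w = feature_map.shape
    h, w = h - h % 2, w - w % 2          # drop an odd trailing row/column
    blocks = feature_map[:h, :w].reshape(h // 2, 2, w // 2, 2)
    return blocks.max(axis=(1, 3))


# Toy 6x6 image: dark left half, bright right half (a vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

edge_kernel = np.array([[-1.0, 0.0, 1.0]] * 3)   # responds to vertical edges

features = np.maximum(conv2d_valid(image, edge_kernel), 0.0)  # ReLU activation
pooled = max_pool2x2(features)
print(features.shape, pooled.shape)
```

The 6x6 input yields a 4x4 feature map that responds only near the edge, and pooling halves each spatial dimension while keeping the strongest response in every block.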

Fully connected layers then combine the gathered features, turning complex spatial relationships into predictive insights. The final layer, which is usually a softmax layer, assigns probabilities to the classes so that the model can make predictions accordingly. Batch normalization and dropout layers are included to enhance training stability and to prevent overfitting, respectively. The CNN optimizes its weights during training using gradient descent and backpropagation. Our dataset of MRI scans is divided into two parts: 70% is used for training, and 30% is used as an independent test set for evaluation.
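
The softmax output stage mentioned above can be sketched in a few lines. The logits below are made-up values for two images, and the class order (index 0 for non-cancerous, index 1 for cancerous) is an assumption chosen for illustration.

```python
import numpy as np


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1 per row."""
    # Subtracting the row maximum keeps exp() numerically stable.
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


# Hypothetical final-layer scores for two images;
# columns: [non-cancerous, cancerous] (assumed order).
logits = np.array([[2.0, 0.5],
                   [-1.0, 1.0]])

probs = softmax(logits)
predicted = probs.argmax(axis=1)   # index of the most probable class per image
print(probs, predicted)
```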

Notably, transfer learning was central to building the CNN. A pre-trained CNN architecture such as ResNet or VGG16, previously trained on a large dataset unrelated to esophageal cancer, supplies generalized features relevant to a range of image recognition applications. We then use our esophageal cancer dataset to adapt the model to the unique characteristics of our specific domain.

3.3. VGG 16

Our comprehensive approach to esophageal cancer prediction leverages the power of VGG16, a pre-trained deep learning model with a strong track record in image recognition. The Visual Geometry Group at Oxford University developed VGG16, which is distinguished by its depth and its uniform convolutional kernel size. This consistency facilitates model interpretation and implementation. Thirteen convolutional layers and three fully connected layers make up the 16 weight layers of the VGG16 model. The convolutional layers use small 3x3 filters; stacking several of them, each followed by a non-linear transformation, allows the network to learn intricate patterns. Max-pooling layers placed between groups of convolutional layers downsample the spatial dimensions while keeping important characteristics. The last fully connected layers facilitate higher-order reasoning by aggregating learned features [28].
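
The layer structure described above can be tallied directly. The sketch below counts the weight layers and parameters of the standard VGG16 configuration, assuming the original ImageNet setting (224x224 RGB input and a 1000-class output); the modified head used in this study is not specified and is not modeled here. The names `VGG16_CONV_CFG` and `count_vgg16` are hypothetical.

```python
# Output channels of each 3x3 convolutional layer; "M" marks a 2x2 max-pooling layer.
VGG16_CONV_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                  512, 512, 512, "M", 512, 512, 512, "M"]
FC_SIZES = [4096, 4096, 1000]   # fully connected layers of the original design


def count_vgg16(cfg, fc_sizes, in_channels=3, input_size=224):
    """Return (conv layers, fully connected layers, total parameters)."""
    conv_layers, params = 0, 0
    channels, size = in_channels, input_size
    for item in cfg:
        if item == "M":
            size //= 2                                  # pooling halves height and width
        else:
            params += 3 * 3 * channels * item + item    # 3x3 weights plus biases
            channels = item
            conv_layers += 1
    features = channels * size * size                   # flattened input to the first FC layer
    for out in fc_sizes:
        params += features * out + out
        features = out
    return conv_layers, len(fc_sizes), params


n_conv, n_fc, n_params = count_vgg16(VGG16_CONV_CFG, FC_SIZES)
print(n_conv, n_fc, n_conv + n_fc, n_params)
```

Most of the parameters sit in the fully connected layers, which is one reason the classification head is commonly replaced when the network is adapted to a small dataset.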

In our work, we classify esophageal cancer using VGG16 in conjunction with a transfer learning strategy. The model learns common image features from weights pre-trained on large datasets, most notably ImageNet. We then fine-tune the modified VGG16 model on our esophageal cancer dataset to extract features specific to our domain. The transfer learning approach draws on the large dataset used to pre-train the model to compensate for the limited data available on esophageal cancer. This improves the model's capacity to generalize across a range of esophageal conditions and speeds up convergence during training [29].
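
The core idea of transfer learning, reusing a base whose weights are already set and training only a new task-specific head, can be shown without a deep learning framework. The NumPy sketch below is a toy stand-in and not the VGG16 pipeline of this study: the "pre-trained" base is a random matrix, the data are synthetic, and only the feature-extraction variant is shown (fine-tuning would also update the base with a small learning rate).

```python
import numpy as np

rng = np.random.default_rng(0)

# Frozen base: a stand-in for pre-trained weights (random here, by assumption).
W_base = rng.normal(size=(16, 8))
W_base_before = W_base.copy()

# Synthetic data standing in for image inputs and binary labels.
X = rng.normal(size=(40, 16))
y = (X[:, 0] > 0).astype(float)


def extract_features(x):
    """Frozen feature extractor: W_base is used but never updated."""
    return np.tanh(x @ W_base)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def log_loss(p, target):
    eps = 1e-12
    return float(-np.mean(target * np.log(p + eps) + (1 - target) * np.log(1 - p + eps)))


# Trainable head: logistic regression on top of the frozen features.
w_head, b_head = np.zeros(8), 0.0
F = extract_features(X)
initial_loss = log_loss(sigmoid(F @ w_head + b_head), y)

for _ in range(200):
    p = sigmoid(F @ w_head + b_head)
    grad = p - y                                  # gradient of the loss w.r.t. the logits
    w_head -= 0.1 * (F.T @ grad) / len(y)         # only the head parameters move
    b_head -= 0.1 * grad.mean()

final_loss = log_loss(sigmoid(F @ w_head + b_head), y)
print(initial_loss, final_loss)
```

Because the base is untouched, only a handful of head parameters are estimated from the small target dataset, which is the property that makes the approach attractive when labeled medical images are scarce.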

3.4. K-Nearest Neighbors (KNN)

Among the techniques investigated for our machine learning classification of esophageal cancer, the K-Nearest Neighbors (KNN) algorithm is one of the most important because it exploits the inherent structure of the MRI dataset. KNN makes predictions by the proximity principle, according to the features of neighboring data points. Images are classified by determining the nearest neighbors of an MRI pattern in the feature space, with closeness defined by a chosen distance metric. KNN provides an intuitive nonparametric approach to predicting esophageal cancer that adapts dynamically to the underlying patterns in the training data. To the extent that this method can capture even small details in the MRI scans, the accuracy and reliability of our predictive model improve [30].
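
The proximity principle can be written out directly. The sketch below is a minimal KNN classifier, assuming Euclidean distance and k = 3 (the paper specifies neither); the two-dimensional feature vectors and labels are invented for illustration, with 0 standing for non-cancerous and 1 for cancerous.

```python
from collections import Counter

import numpy as np


def knn_predict(train_x, train_y, query, k=3):
    """Classify a query point by majority vote among its k nearest neighbors."""
    distances = np.linalg.norm(train_x - query, axis=1)   # Euclidean distance (assumed metric)
    nearest = np.argsort(distances)[:k]                   # indices of the k closest points
    votes = Counter(int(train_y[i]) for i in nearest)
    return votes.most_common(1)[0][0]


# Toy feature vectors; labels: 0 = non-cancerous, 1 = cancerous (illustrative only).
train_x = np.array([[0.10, 0.20], [0.20, 0.10], [0.15, 0.25],
                    [0.90, 0.80], [0.80, 0.90], [0.85, 0.95]])
train_y = np.array([0, 0, 0, 1, 1, 1])

label_a = knn_predict(train_x, train_y, np.array([0.2, 0.2]))
label_b = knn_predict(train_x, train_y, np.array([0.9, 0.9]))
print(label_a, label_b)
```

There is no training step: the stored examples are the model, and all of the work happens at prediction time.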

3.5. Recurrent Neural Networks (RNN)

Recurrent neural networks (RNNs) offer a compelling option for examining the complex field of esophageal cancer prediction because they are built to extract temporal dependencies from sequential data. Even though MRI scans continue to be our primary focus, the temporal dimension is crucial when taking into account longitudinal studies or dynamic changes in esophageal conditions over time. In addition, because RNNs have memory cells, the model can remember data from earlier scans and understand how the disease is changing over time. This is especially helpful when the rate at which esophageal cancer is progressing is a significant factor [31]. We anticipate that the addition of RNNs to our repertoire of machine learning techniques will improve our model's predictive ability by allowing it to understand the temporal subtleties found in the dataset.
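
The way a recurrent network carries information from earlier inputs to later ones can be seen in its update rule. The sketch below implements the forward pass of a plain (Elman-style) RNN in NumPy; the paper does not specify its RNN variant, so the cell type, the dimensions, and the random weights are all assumptions made for illustration.

```python
import numpy as np


def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a plain RNN over a sequence and return the hidden state at every step.

    Each state depends on the current input and on the previous state,
    which is how information from earlier steps is carried forward.
    """
    h = np.zeros(W_hh.shape[0])
    states = []
    for x_t in inputs:
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)


rng = np.random.default_rng(0)
sequence = rng.normal(size=(5, 4))            # 5 time steps, 4 features each (toy data)
W_xh = rng.normal(scale=0.5, size=(3, 4))     # input-to-hidden weights
W_hh = rng.normal(scale=0.5, size=(3, 3))     # hidden-to-hidden (recurrent) weights
b_h = np.zeros(3)

states = rnn_forward(sequence, W_xh, W_hh, b_h)
print(states.shape)
```

In a longitudinal setting, each time step would correspond to features extracted from one scan in a patient's series, and the final hidden state would feed a classification layer.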

4. RESULT AND DISCUSSION

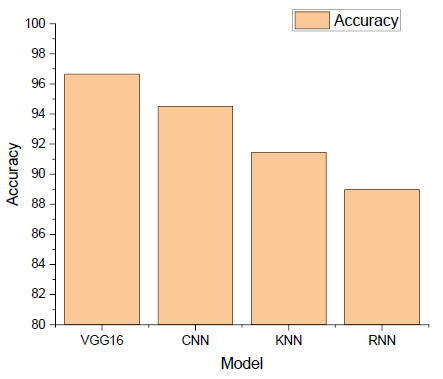

To ascertain each machine learning model's prognostic significance for esophageal cancer, every model underwent rigorous training, assessment, and analysis. With an accuracy of 96.66%, VGG16 is the best-performing model, as shown in Fig. (2). This deep learning architecture recognizes intricate patterns in MRI scans and accurately classifies esophageal cancer by using pre-trained weights and sophisticated feature extraction capabilities.

The Convolutional Neural Network (CNN) model ranks second, with an accuracy of 94.5%. Because CNNs were designed with image analysis in mind, their capacity to identify spatial patterns in esophageal scans improves predictive performance. The K-Nearest Neighbors (KNN) and Recurrent Neural Network (RNN) algorithms reach accuracies of 91.44% and 88.97%, respectively. KNN's proximity-based learning, which is predicated on the characteristics of neighboring data points, makes it effective at identifying relevant patterns in the dataset. Meanwhile, because the RNN is designed to interpret temporal dependencies, it is well suited to identifying ever-finer details in esophageal conditions over time. Fig. (2) presents a graphic comparison of the performance scores and the degree to which each model was able to identify responses associated with esophageal cancer.

| Model | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|
| VGG16 | 97.0 | 95.0 | 96.0 | 96.66 |
| CNN | 95.0 | 93.0 | 94.0 | 94.5 |
| KNN | 92.0 | 90.0 | 91.0 | 91.44 |
| RNN | 89.0 | 87.0 | 88.0 | 88.97 |

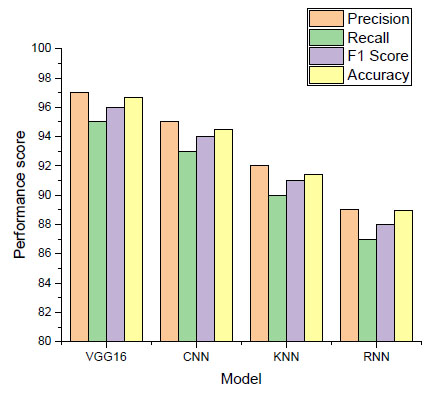

Table 2 gives the performance scores of the machine learning models we used to identify esophageal cancer. The predictive power of the VGG16, CNN, KNN, and RNN models for malignant responses from MRI scans is demonstrated by comparing them across several important metrics. Fig. (3) shows the performance scores for the different evaluation metrics.

Fig. (2). Accuracy of the machine learning model.

Fig. (3). Performance score of machine learning model.

As depicted in Fig. (3), our best-performing model, VGG16, attains a precision of 97.0%, meaning that 97.0% of its positive predictions are truly cancerous cases. The 95.0% recall shows that VGG16 captures a large share of the real positive cases. The F1 score, the harmonic mean of precision and recall, is 96.0%, indicating balanced performance. These outstanding results, together with an overall accuracy of 96.66%, show that VGG16 is a valid diagnostic tool for esophageal cancer. The CNN model, ranked second, has a 94.0% F1 score, 93.0% recall, and 95.0% precision, making it a highly proficient image analysis model. With an accuracy of 94.5%, this model is highly effective at identifying spatial patterns in MRI scans.

With proximity-based learning, the KNN algorithm achieves a 91.0% F1 score, 90.0% recall, and 92.0% precision. It uses nearby data points effectively to identify pertinent patterns in the dataset, achieving an accuracy of 91.44%. The RNN, with an 88.0% F1 score, 89.0% precision, and 87.0% recall, fits the interpretation of temporal dependencies well. With an accuracy of 88.97%, it can identify progressively finer variations in esophageal conditions.
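
The F1 score is the harmonic mean of precision and recall, F1 = 2PR / (P + R). As a consistency check, the sketch below recomputes it from the precision and recall values reported for each model and compares the result with the reported F1 score; the tolerance of 0.05 percentage points is an assumption that allows for rounding in the published figures.

```python
# (precision, recall, reported F1), all in percent, as reported for each model.
REPORTED = {
    "VGG16": (97.0, 95.0, 96.0),
    "CNN": (95.0, 93.0, 94.0),
    "KNN": (92.0, 90.0, 91.0),
    "RNN": (89.0, 87.0, 88.0),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


recomputed = {model: f1_score(p, r) for model, (p, r, _) in REPORTED.items()}

for model, (_, _, reported_f1) in REPORTED.items():
    gap = abs(recomputed[model] - reported_f1)
    print(model, round(recomputed[model], 2), reported_f1, gap < 0.05)
```

For all four models the recomputed value agrees with the reported F1 score to within the rounding tolerance.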

CONCLUSION

In conclusion, our work uses various machine learning models to try to change the way esophageal cancer is diagnosed. The results in Table 2 demonstrate how well VGG16, CNN, KNN, and RNN predict cancerous responses from MRI scans. With an accuracy of 96.66%, VGG16 outperforms the other models; CNN comes second at 94.5%. The models' distinct strengths, namely capturing spatial patterns, leveraging proximity-based learning, and recognizing temporal dependencies, are reflected in their strong precision, recall, and F1 scores. Each technique has advantages of its own. These findings could greatly improve the early identification and categorization of esophageal cancer, giving medical professionals useful resources to enhance patient outcomes. The combination of machine learning techniques advances the ongoing development of diagnostic procedures, providing a more accurate, efficient, and user-friendly means of diagnosing esophageal cancer. The results of this work may not only benefit the particular field of esophageal cancer but also serve as a model for applying cutting-edge computational methods in more general cancer research and precision medicine.

For future research, we propose the following directions:

Enhanced Model Integration

Combining more advanced and diverse machine learning algorithms to further improve diagnostic accuracy and robustness.

Larger and Diverse Datasets

Utilizing larger, more diverse datasets to improve the generalizability and reliability of our models across different populations and imaging modalities.

Explainable AI (XAI) Implementation

Incorporating XAI techniques to make the model decisions more transparent and understandable to clinicians, enhancing trust and adoption in clinical settings.

Real-time Diagnostics

Developing real-time diagnostic tools that can be seamlessly integrated into clinical workflows to provide instant and reliable cancer detection.

Cross-disciplinary Collaboration

Encouraging collaborations between data scientists, clinicians, and researchers to explore innovative approaches and validate the practical utility of our models in real-world clinical environments.

These future directions will ensure continuous improvement and innovation in the field, ultimately leading to better patient care and outcomes.

AUTHOR’S CONTRIBUTION

It is hereby acknowledged that all authors have accepted responsibility for the manuscript's content and consented to its submission. They have meticulously reviewed all results and unanimously approved the final version of the manuscript.

LIST OF ABBREVIATIONS

| CNN | = Convolutional Neural Network |
| MLP | = Multi-Layer Perceptron |
| XAI | = Explainable Artificial Intelligence |
| SVM | = Support Vector Machines |
| ML | = Machine Learning |
| ANN | = Artificial Neural Network |
| RF | = Random Forests |